Fiction and Reality

I awoke today with a sentence stuck in my mind.

Fantasie bedeutet sich das Zukünftige richtig vorzustellen.

Imagination means properly envisioning the future.

I was sure I had read it a long time ago but could not quite place the author. My best guess was the Swiss writer Ludwig Hohl, and after some research I finally found the passage, though my memory had not reproduced it word for word.

What I understand by imagination – the highest human activity – (…) is the ability to correctly envision another situation. (…) ‘Correct’ here is what withstands the practical test.

(The Notes, XII.140)

The most important thing about imagination is contained in these two sentences:

1. Imagination is the ability to correctly envision distant (different) circumstances – not incorrectly, as is often assumed (because anyone could do that).

2. Imagination is not, as is often assumed, a luxury, but one of the most important tools for human salvation, for life.

(The Notes, XII.57)

The fantastic and the prophetic (predictive) mind draw from the same source, but with different instruments and intentions.

Fiction and reality: both are valid states of mind. Reality does what simulation imagines.

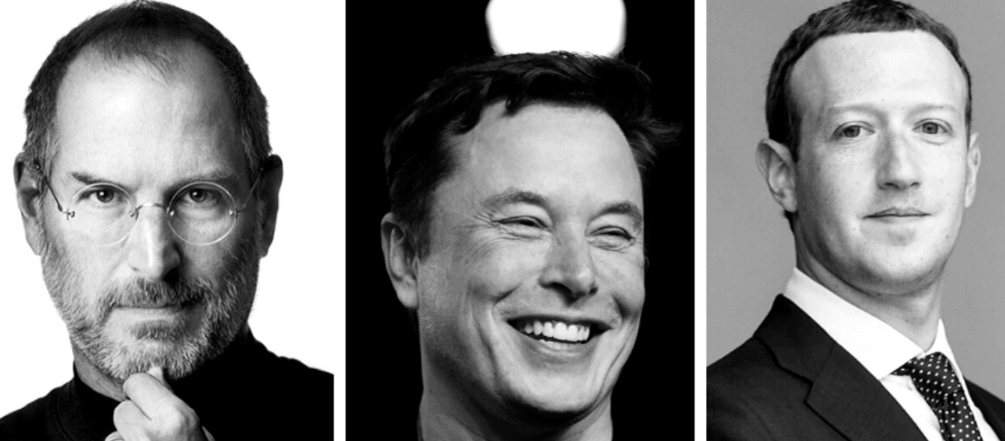

Visions are controlled hallucinations.

My Own Experiences

In 2004, I wrote an unpublished novel titled “The Goldberg Variant.” In it, I explored the notion of a Virtual Person: a re-creation of an individual based on their body of work, analyzed and reconstructed by machine intelligence. One of the characters was Schubert 2.0, an AI-powered android modeled after the original Schubert. Interestingly, I called such a being a Trans-Person, a term I borrowed from Grof’s transpersonal psychology, without even imagining the identity wars of the present. This android lived in a replicated 19th-century Vienna and continued to compose music. Much like the TV series Westworld, this setting allowed human visitors to immerse themselves in another time.

I should note that from ages 8 to 16, I was deeply engrossed in science fiction. It’s possible that these readings influenced my later writings, even if I wasn’t consciously drawing from them.

Within the same novel, a storyline unfolds where one of the characters becomes romantically involved with an AI. The emotional maturation of this AI becomes a central theme. My book touched on many points that resonate with today’s discussions on AI alignment, stemming from my two-decade-long research into AI and extensive sci-fi readings.

The novel’s titular character experiences a unique form of immortality. Whenever the music J.S. Bach composed for him is played, he is metaphorically resurrected. Yet, this gift also torments him, leading him on a violent journey through time.

Years later, I came across Nick Bostrom’s term “ancestor simulation.” More recently, I read about the origins of one of the first AI companion apps, conceived from the desire to digitally resurrect a loved one. I believe Ray Kurzweil once expressed a similar sentiment, hoping to converse with a digital representation of his late father using AI trained on his father’s writings and recordings. Just today, I heard Jordan Peterson discussing a concept eerily similar to mine.

Kurzweil’s Track Record

Predictions Ray Kurzweil Got Right Over the Last 25 Years:

1. In 1990, he predicted that a computer would defeat a world chess champion by 1998. IBM’s Deep Blue defeated Garry Kasparov in 1997.

2. He predicted that PCs would be capable of answering queries by accessing information wirelessly via the Internet by 2010.

3. He predicted that by the early 2000s, exoskeletal limbs would let the disabled walk. Companies like Ekso Bionics have since developed such technology.

4. In 1999, he predicted that people would be able to talk to their computer to give commands by 2009. Technologies like Apple’s Siri and Google Now emerged.

5. He predicted that computer displays would be built into eyeglasses for augmented reality by 2009. Google started experimenting with Google Glass prototypes in 2011.

6. In 2005, he predicted that by the 2010s, virtual solutions would do real-time language translation. Microsoft’s Skype Translator and Google Translate are examples.

Ray’s Predictions for the Next 25 Years:

1. By the late 2010s, glasses will beam images directly onto the retina. Ten terabytes of computing power will cost about $1,000.

2. By the 2020s, most diseases will go away as nanobots become smarter than current medical technology. Normal human eating can be replaced by nanosystems. The Turing test begins to be passable. Self-driving cars begin to take over the roads.

3. By the 2030s, virtual reality will begin to feel 100% real. We will be able to upload our mind/consciousness by the end of the decade.

4. By the 2040s, non-biological intelligence will be a billion times more capable than biological intelligence. Nanotech foglets will be able to make food out of thin air and create any object.

5. By 2045, we will multiply our intelligence a billionfold by linking wirelessly from our neocortex to a synthetic neocortex in the cloud.

These predictions are based on Kurzweil’s understanding of the power of Moore’s Law and the exponential growth of technologies. It’s important to note that while some of these predictions may seem far-fetched, Kurzweil has a track record of making accurate predictions in the past.
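Exponential forecasts like these rest on repeated doubling, and the arithmetic behind a phrase such as “a billion times” is easy to check. The following is a minimal Python sketch that assumes a constant doubling period; the two years often quoted for Moore’s Law is used purely as an illustrative assumption, and since Kurzweil argues that the pace itself accelerates, a fixed period understates his curve. The function names are hypothetical.

```python
import math


def doublings_needed(factor: float) -> int:
    """Smallest number of doublings whose cumulative growth reaches `factor`."""
    return math.ceil(math.log2(factor))


def years_to_reach(factor: float, doubling_period_years: float) -> float:
    """Years needed to grow by `factor`, assuming a constant doubling period."""
    return doublings_needed(factor) * doubling_period_years


def growth_after(years: float, doubling_period_years: float) -> float:
    """Growth factor after `years`, assuming a constant doubling period."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    billion = 1e9
    print(doublings_needed(billion))       # 30, since 2**30 is about 1.07 billion
    print(years_to_reach(billion, 2.0))    # 60.0 years at an assumed two-year doubling
    print(round(growth_after(25, 2.0)))    # 5793: growth over 25 years at that pace
```

A billionfold gain is only about 30 doublings, which is one reason exponential forecasts that sound absurd can still arrive within a few decades.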

It’s fascinating how Kurzweil displayed what seems like clairvoyance in his predictions about the Singularity. Now, looking back, it almost feels supernatural. When I learned that Jordan Peterson had embarked on a project to train an AI on the “Spirit of the King James Bible” so that people could converse with it, I was reminded of the notion that science and religion might be parallel paths that intersect at infinity.

Kurzweil’s case is particularly intriguing because his own assessment of his predictions differs significantly from the public’s. He should have predicted that too, obviously.

When we pass away, is it a form of resurrection every time someone refers to our writings? The idea that we persist in others’ memories could be more than just a metaphor. What happens if, long after my physical body and mind have succumbed to the passage of time, my descendants consult my digital avatar about the era when Artificial General Intelligence first emerged?

Verne’s Track Record

It is astounding how many predictions Jules Verne, the father of science fiction, got right: he practically seems to have seen well over a century into the future. Interestingly, he often did not get the timeline right; he was far too conservative to see the exponential curve of technologies that bootstrap from each other.

When I explored this topic with ChatGPT, it came up with the following list:

Jules Verne, known as the father of science fiction, was a French novelist who wrote in the 19th century. His works are full of imaginative inventions that were far ahead of his time. Some of these have since become reality, while others remain purely speculative. Here are nine such inventions, with their modern counterparts and an assessment of their accuracy:

Electric Submarines – 20,000 Leagues Under the Sea (1870): Verne’s submarine, the Nautilus, was powered by electricity long before real submarines were. The first fully functional and practical submarines were indeed powered by diesel and electricity, but that wasn’t until the early 20th century. This was a remarkably accurate prediction. Accuracy: 90% (The only real difference is that the Nautilus was far more advanced than early real-life submarines.)

Newscasts – In the Year 2889 (1889): Verne imagined a world where news was delivered to individual homes via technology. Today, we have 24-hour news channels, internet news sites, and social media feeds. Accuracy: 100%

Video Conferencing – In the Year 2889 (1889): Verne predicted a device he called a “phonotelephote,” which allowed for “the transmission of images by means of sensitive mirrors connected by wires.” This is remarkably similar to our video conferencing technology today. Accuracy: 100%

Lunar Modules – From the Earth to the Moon (1865): Verne wrote about a capsule that could carry passengers to the moon. This became a reality in 1969 when Apollo 11 landed on the moon. However, Verne’s method of propulsion (a giant cannon) was not accurate. Accuracy: 70% (The concept of a vehicle traveling to the moon was correct, but the propulsion method was not.)

Tasers – 20,000 Leagues Under the Sea (1870): Verne describes a hunting rifle that shoots electric bullets in this book. Today, we have Tasers that incapacitate targets using electricity. Accuracy: 80% (While a Taser isn’t a rifle, it does deliver an electric charge to a target, which aligns with Verne’s concept.)

Helicopters – Robur the Conqueror (1886): Verne describes a flying machine that uses propellers to create lift. While the real-world helicopter came about differently and had many more challenges to overcome than Verne’s model, the basic concept was there. Accuracy: 60% (The basic principle of lift from rotary wings was correct, but the implementation was oversimplified.)

Electrically Lit Cities – Paris in the Twentieth Century (1863): Verne predicted cities would be lit by electricity, which became true with the widespread use of electric lighting. Accuracy: 100%

Skywriting – Five Weeks in a Balloon (1863): Verne describes a scenario in which messages are written in the sky, a precursor to today’s skywriting. Accuracy: 100%

The Internet – Paris in the Twentieth Century (1863): Verne describes a global network of computers that enables instant communication. This could be seen as a prediction of the internet, but the way it functions and its role in society are not very accurate. Accuracy: 50% (The existence of a global communication network is correct, but the specifics are quite different.)

Side note: I heard an anecdote that Edison would put himself into a kind of hypnagogic trance to come up with new inventions; a scribe sat with him and wrote down what he murmured in this state.

Bush’s Track Record

Vannevar Bush’s essay “As We May Think” was published in The Atlantic in 1945.

“As We May Think” is a seminal article envisioning the future of information technology. It introduces several groundbreaking ideas.

Associative Trails and Linking: Bush discusses the idea of associative indexing, noting that the human mind operates by association. With one item in its grasp, it snaps instantly to the next that is suggested by the association of thoughts. He describes a system in which every piece of information is linked to other relevant information, allowing a user to navigate through data in a non-linear way. This is quite similar to the concept of hyperlinks in today’s World Wide Web.
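Bush’s associative trails map naturally onto a small graph structure. The minimal Python sketch below is an illustrative toy under stated assumptions, not Bush’s own design: items are stored by key, associations are two-way links, and a named trail is an ordered path whose consecutive steps are also linked, so a reader can follow the trail in order or jump sideways to any association. The class name `AssociativeStore` and the sample items are hypothetical; the bow theme loosely echoes the example in Bush’s essay.

```python
from collections import defaultdict


class AssociativeStore:
    """Toy model of associative indexing: linked items plus named, replayable trails."""

    def __init__(self) -> None:
        self.items: dict[str, str] = {}
        self.links: dict[str, set[str]] = defaultdict(set)
        self.trails: dict[str, list[str]] = {}

    def add_item(self, key: str, content: str) -> None:
        self.items[key] = content

    def link(self, a: str, b: str) -> None:
        # Tie two items together in both directions.
        self.links[a].add(b)
        self.links[b].add(a)

    def record_trail(self, name: str, keys: list[str]) -> None:
        # A trail is an ordered path; each consecutive step also becomes an association.
        for a, b in zip(keys, keys[1:]):
            self.link(a, b)
        self.trails[name] = list(keys)

    def neighbors(self, key: str) -> list[str]:
        # Non-linear navigation: jump from an item to anything associated with it.
        return sorted(self.links.get(key, set()))


# Usage with hypothetical items, loosely echoing the bow example in Bush's essay.
store = AssociativeStore()
store.add_item("turkish-bow", "Notes on the short Turkish bow")
store.add_item("english-longbow", "Notes on the English long bow")
store.add_item("elasticity", "Article on the elasticity of materials")
store.record_trail("bow", ["turkish-bow", "english-longbow", "elasticity"])
print(store.neighbors("english-longbow"))  # ['elasticity', 'turkish-bow']
print(store.trails["bow"])                 # the trail replayed in its recorded order
```

Keeping trails separate from links mirrors the two ways of reading described above: replaying someone’s path in order, or branching off at any point.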

Augmenting Human Intellect: Bush proposes that the use of these new tools and technologies will augment human intellect and memory by freeing the mind from the tyranny of the past, making all knowledge available and usable. It will enable us to use our brains more effectively by removing the need to memorize substantial amounts of information.

Lem’s Track Record

The main difference between Nostradamus or the Oracle of Delphi and actual prophets is that we can validate the latter’s predictions.

Take Stanislaw Lem:

E-books: Lem wrote about a device similar to an e-book reader in his 1961 novel “Return from the Stars”. He described an “opton”, which is a device that stores content in crystals and displays it on a single page that can be changed with a touch, much like an e-book reader today.

Audiobooks: In the same novel, he also introduced the concept of “lectons” – devices that read out loud and could be adjusted according to the desired voice, tempo, and modulation, which closely resemble today’s audiobooks.

Internet: In 1957, Lem predicted the formation of interconnected computer networks in his book “Dialogues”. He envisaged the amalgamation of IT machines and memory banks leading to the creation of large-scale computer networks, which is akin to the internet we know today.

Search Engines: In his 1955 novel “The Magellanic Cloud”, Lem described a massive virtual database accessible through radio waves, known as the “Trion Library”. This description is strikingly similar to modern search engines like Google.

Smartphones: In the same book, Lem also predicted a portable device that provides instant access to the Trion Library’s data, similar to how smartphones provide access to internet-based information today.

3D Printing: Lem described a process in “The Magellanic Cloud” that is similar to 3D printing, where a device uses a ‘product recipe’ to create objects, much like how 3D printers use digital files today.

Simulation Games: Lem’s story collection “The Cyberiad” is said to have inspired Will Wright, the creator of the popular simulation game “The Sims”. One of its stories features a character creating a microworld in a box, a concept that parallels the creation and control of a simulated environment in “The Sims”.

Virtual Reality: In his 1964 book “Summa Technologiae”, Lem conceptualized “fantomatons”, machines that can create alternative realities almost indistinguishable from the actual ones. The resemblance to virtual reality (VR) as we understand it today is striking: like the fantomaton, VR immerses users in a simulated environment. As of 2022, VR technology has advanced significantly, with devices like Meta’s Oculus Quest 2 leading the market. The VR industry continues to grow, with over 13.9 million VR headsets expected to ship in 2022 and sales projected to surpass 20 million units in 2023.

Borges’ Track Record

Although Jorge Luis Borges is not known as a classic science fiction author, many of his stories can be read as parables of current technological breakthroughs.

Jorge Luis Borges was a master of metaphors and allegories, crafting intricate and thought-provoking stories that have been analyzed for their philosophical and conceptual implications. Two of his most notable works in this context are “On Exactitude in Science” and “The Library of Babel”.

“On Exactitude in Science” describes an empire where the science of cartography becomes so exact that only a map on the same scale as the empire itself would suffice. This story has been seen as an allegory for simulation and representation, illustrating the tension between a model and the reality it seeks to capture. It’s about the idea of creating a perfect replica of reality, which eventually becomes indistinguishable from reality itself.

“The Library of Babel” presents a universe consisting of an enormous expanse of hexagonal rooms filled with books. These books contain every possible ordering of a set of basic characters, meaning that they encompass every book that has been written, could be written, or might be written with slight permutations. While this results in a vast majority of gibberish, the library must also contain all useful information, including predictions of the future and biographies of any person. However, this abundance of information renders most of it useless due to the inability to find relevant or meaningful content amidst the overwhelming chaos.
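The combinatorics behind the Library of Babel can be made concrete. In the story, each book has 410 pages of 40 lines, each line of some 80 characters, drawn from 25 orthographic symbols. The minimal Python sketch below computes how many distinct books those rules allow, using logarithms because the exact number is far too large to print, and then enumerates a toy library over a three-symbol alphabet to show the “every possible ordering” principle at a scale you can inspect. The function names are illustrative.

```python
import itertools
import math

# Parameters from Borges's story: 25 symbols, 410 pages, 40 lines per page,
# about 80 characters per line.
SYMBOLS = 25
PAGES, LINES, CHARS_PER_LINE = 410, 40, 80


def chars_per_book() -> int:
    return PAGES * LINES * CHARS_PER_LINE


def digits_in_book_count() -> int:
    """Decimal digits in SYMBOLS ** chars_per_book(), computed via log10.

    The exact integer has about 1.8 million digits, so we avoid building its string.
    """
    return math.floor(chars_per_book() * math.log10(SYMBOLS)) + 1


def tiny_library(alphabet: str, length: int) -> list[str]:
    """Every possible 'book' of the given length over a toy alphabet."""
    return ["".join(chars) for chars in itertools.product(alphabet, repeat=length)]


print(chars_per_book())         # 1312000
print(digits_in_book_count())   # 1834098
print(tiny_library("ab ", 2))   # all 9 two-character books over 'a', 'b' and space
```

The total is roughly 25 raised to the 1,312,000th power, a number with 1,834,098 digits; the toy version shows why almost every shelf holds gibberish, since meaningful strings are a vanishing fraction of all orderings.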

These stories certainly bear some resemblance to the concept of large language models (LLMs) like GPT-3. LLMs are trained on vast amounts of data and can generate a near-infinite number of combinations of words and sentences, much like the books in the Library of Babel. However, just as in Borges’ story, the vastness of possible outputs can also lead to nonsensical or irrelevant responses, reflecting the challenge of finding meaningful information in the glut of possibilities.

As for the story of the perfect map, it could be seen as analogous to the aspiration of creating a perfect model of human language and knowledge that LLMs represent. Just as the map in the story became the same size as the territory it represented, LLMs are models that aim to capture the vast complexity of human language and knowledge, creating a mirror of reality in a sense.

Borges also wrote a piece titled “Ramón Llull’s Thinking Machine” in 1937, where he described and interpreted the machine created by Ramon Llull, a 13th-century Catalan poet and theologian.

The machine that Borges describes is a conceptual tool, a sort of diagram or mechanism for generating ideas or knowledge. The simplest form of Llull’s machine, as described by Borges, was a circle divided into nine sections. Each division was marked with a letter that stood for an attribute of God, such as goodness, greatness, eternity, power, wisdom, love, virtue, truth, and glory. All of these attributes were considered inherent and systematically interrelated, and the diagram served as a tool to contemplate and generate various combinations of these attributes.

Borges then describes a more elaborate version of the machine, consisting of three concentric, manually revolving disks made of wood or metal, each with fifteen or twenty compartments. The idea was that these disks could be spun to create a multitude of combinations, as a method of applying chance to the resolution of a problem. Borges uses the example of determining the “true” color of a tiger, assigning a color to each letter and spinning the disks to create a combination. Despite the potentially absurd or contradictory results this could produce, Borges notes that adherents of Llull’s system remained confident in its ability to reveal truths, recommending the simultaneous deployment of many such combinatory machines.
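Both versions of Llull’s machine are, at heart, combinatorial procedures: pairing a fixed set of attributes, and reading off whatever the aligned compartments of spinning disks happen to show. The Python sketch below models this under stated assumptions. The nine attributes come from the text; the compartment labels (such as “A3”), the default of fifteen compartments, and the tiger colors are hypothetical placeholders, not Llull’s own notation.

```python
import itertools
import random

# The nine divine attributes listed in the text (the simplest figure).
ATTRIBUTES = ["goodness", "greatness", "eternity", "power", "wisdom",
              "love", "virtue", "truth", "glory"]


def attribute_pairs() -> list[tuple[str, str]]:
    """Every unordered pairing of two attributes to contemplate together."""
    return list(itertools.combinations(ATTRIBUTES, 2))


def make_disks(compartments: int = 15, n_disks: int = 3) -> list[list[str]]:
    """Hypothetical labeling: disk letter plus slot number, e.g. 'A3' or 'C14'."""
    return [[f"{chr(ord('A') + d)}{slot}" for slot in range(compartments)]
            for d in range(n_disks)]


def all_alignments(disks: list[list[str]]) -> list[tuple[str, ...]]:
    """Every combination the concentric disks can line up along one radius."""
    return list(itertools.product(*disks))


def spin(disks: list[list[str]], rng: random.Random) -> tuple[str, ...]:
    """Apply chance: turn each disk independently and read the aligned labels."""
    return tuple(rng.choice(disk) for disk in disks)


# Borges's tiger example with made-up colors: each disk offers the same colors.
colors = ["yellow", "black", "white", "red", "blue"]
tiger_disks = [colors] * 3
print(len(attribute_pairs()))              # 36
print(len(all_alignments(make_disks())))   # 3375
print(spin(tiger_disks, random.Random(0))) # a (possibly contradictory) color triple
```

Three disks of fifteen compartments yield 15³ = 3,375 alignments (8,000 with twenty), and nine attributes give 36 pairs; the seeded random spin stands in for the application of chance that Borges describes.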

Llull’s own intention with this system was to create a universal language using a logical combination of terms, to assist in theological debates and other intellectual pursuits. His work culminated in the completion of “Ars generalis ultima” (The Ultimate General Art) in 1308, in which he employed this system of rotating disks to generate combinations of concepts. Llull believed that there were a limited number of undeniable truths in all fields of knowledge, and by studying all combinations of these elementary truths, humankind could attain the ultimate truth.

14 Entertaining Predictions for the Next 3 Years

At this point, I will make some extremely specific predictions about the future, especially about the entertainment industry. In 2026, I will revisit this blog and check how I did.

2023: Music Industry

1. Paul McCartney releases a song either by or in tribute to John Lennon, co-created with AI.

2024: Music Industry

2. A new global copyright regulation titled “The Human Creative Labor Act” will be introduced, safeguarding human creators against unauthorized use of their work. This act will serve as a pivotal test for human-centered AI governance.

3. Various platforms will emerge with the primary intention of procuring works from deceased artists not yet in the public domain.

4. The music industry, in collaboration with the estates of deceased artists, will produce its first artificial albums. These albums will use the voices and styles of late pop stars, starting with Michael Jackson.

5. The industry will launch AI-rendered cover versions, such as Michael Jackson performing Motown hits from the 1950s or Elvis singing contemporary tracks.

6. After the death of any celebrated artist, labels will immediately secure the rights to produce cover albums using AI-trained voice models of the artist.

2025: Music Industry

7. Bands will initiate tours featuring AI-generated vocal models of their deceased lead singers. A prime example could be Queen touring with an AI rendition of Freddie Mercury’s voice.

2023: Film Industry

8. Harrison Ford and Will Smith will appear on screen as flawless, younger versions of themselves.

2024: Film Industry

9. As they retire, several film stars will license their digital likenesses (voice, motion capture, etc.) to movie studios. Potential candidates include Harrison Ford, Samuel L. Jackson, Michael J. Fox, Bill Murray, Arnold Schwarzenegger, and Tom Cruise.

10. Movie studios will announce continuations of iconic franchises.

11. Film classics will undergo meticulous restoration, enhancing visuals to 8K and upgrading audio to crisp Dolby Digital. Probable candidates: the original Star Wars Trilogy and classic Disney animations such as Snow White and Pinocchio.

2025: Film Industry

12. Netflix will introduce a feature allowing users to select from a library of actors and visualize their favorite films starring those actors. For instance, viewers could opt for Sean Connery as James Bond across all Bond films, experiencing an impeccable cinematic illusion.

2026: Film Industry

13. Netflix will offer a premium service enabling viewers to superimpose their faces onto their preferred series’ characters, for an additional fee.

2025: Entertainment/Business Industry

14. Select artists and individuals will design and market a virtual persona. This persona will be tradeable on stock exchanges, granting investors an opportunity to acquire shares. A prime candidate is Elon Musk. Shareholders in “Elon-bot” could access a dedicated app for one-on-one interactions. The AI, underpinned by a sophisticated language model from x.ai, will be trained on Elon’s tweets, interviews, and public comments.